Filebeat syslog input

Inputs specify how Filebeat locates and processes input data. Our infrastructure isn't that large or complex yet, but we're hoping to get some good practices in place to support that growth down the line.

`ecs_compatibility` (Logstash syslog input): the default value depends on which version of Logstash is running. This setting controls the plugin's compatibility with the Elastic Common Schema (ECS).

`group` (Unix socket): the default is the primary group name for the user Filebeat is running as.

`harvester_limit` caps the number of harvesters started in parallel for one input, and therefore the number of file handlers that are opened. `include_lines` and `exclude_lines` each take a list of regular expressions that match the lines you want Filebeat to include or exclude. The `close_*` configuration options close the harvester once a certain criterion or time limit is reached. To enable JSON parsing mode, you must specify at least one of the `json.*` settings.
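The following is a minimal sketch of JSON parsing mode on a log input. The path and the `json.message_key` value are hypothetical, and the file is assumed to contain one JSON object per line. Setting any one of these `json.*` options is enough to turn decoding on.

```yaml
filebeat.inputs:
  - type: log
    paths:
      - /var/log/myapp/*.json      # hypothetical path; one JSON object per line
    json.keys_under_root: true     # put decoded keys at the top level of the event
    json.add_error_key: true       # add an error key when a line cannot be decoded
    json.message_key: message      # hypothetical key; lets line filtering and multiline apply to this field
```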

I have Filebeat listening for syslog on my local network on TCP port 514 (the config file is quoted further down). I send a test event with `logger`:

```shell
# Send one CEF event over TCP to the Filebeat syslog listener at 192.168.2.190:514.
# Single quotes keep the embedded JSON double quotes and backslashes intact;
# the #012 sequences are rsyslog-style escapes for newlines.
logger -n 192.168.2.190 -P 514 --tcp 'CEF:0|Trend Micro|Apex Central|2019|700211|Attack Discovery Detections|3|deviceExternalId=5 rt=Jan 17 2019 03:38:06 EST dhost=VCAC-Window-331 dst=10.201.86.150 customerExternalID=8c1e2d8f-a03b-47ea-aef8-5aeab99ea697 cn1Label=SLF_RiskLevel cn1=0 cn2Label=SLF_PatternNumber cn2=30.1012.00 cs1Label=SLF_RuleID cs1=powershell invoke expression cat=point of entry cs2Label=SLF_ADEObjectGroup_Info_1 cs2=process - powershell.exe - {#012 "META_FILE_MD5" : "7353f60b1739074eb17c5f4dddefe239",#012 "META_FILE_NAME" : "powershell.exe",#012 "META_FILE_SHA1" : "6cbce4a295c163791b60fc23d285e6d84f28ee4c",#012 "META_FILE_SHA2" : "de96a6e69944335375dc1ac238336066889d9ffc7d73628ef4fe1b1b160ab32c",#012 "META_PATH" : "c:\\windows\\system32\\windowspowershell\\v1.0\\",#012 "META_PROCESS_CMD" : [ "powershell iex test2" ],#012 "META_PROCESS_PID" : 10924,#012 "META_SIGNER" : "microsoft windows",#012 "META_SIGNER_VALIDATION" : true,#012 "META_USER_USER_NAME" : "Administrator",#012 "META_USER_USER_SERVERNAME" : "VCAC-WINDOW-331",#012 "OID" : 1#012}#012'
```

I took this CEF example but edited the `rt` date to `Jan 17 2019 03:38:06 EST` (since `Jan 17 2019 03:38:06 GMT+` …). If I had reason to use syslog-ng, then that's what I'd do.

When dealing with file rotation, avoid harvesting symlinks. For the TCP protocol, `host` is the host and TCP port to listen on for event streams. `framing` specifies the framing used to split incoming events, and its possible values are `delimiter` and `rfc6587`. Listening on privileged ports (below 1024) may require root. `pipeline` is the ingest pipeline ID to set for the events generated by this input.

With `include_lines` you can, for example, export only lines that start with ERR or WARN. If both `include_lines` and `exclude_lines` are defined, Filebeat executes `include_lines` first and then `exclude_lines`. The order in which the two options are defined doesn't matter. When `harvester_limit` is reached and a new harvester can be started again, the harvester is currently picked randomly.

Modules that receive syslog directly are configured through variables such as `var.syslog_host: 0.0.0.0`.
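For the module route, the following sketch shows the Cisco module's `asa` fileset listening for syslog directly. The fileset choice and port 9001 are illustrative assumptions. Check the module reference for your Filebeat version before relying on these variable names.

```yaml
filebeat.modules:
  - module: cisco
    asa:
      enabled: true
      var.input: syslog          # read from a syslog listener instead of files
      var.syslog_host: 0.0.0.0   # listen on all interfaces
      var.syslog_port: 9001      # example port; ports below 1024 may require root
```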

`line_delimiter` specifies the characters used to split incoming events; the default is `\n`. WINDOWS: if your Windows log rotation system shows errors because it can't rotate the files, enable `close_renamed`. `fields` are optional fields that you can specify to add additional information to the output.
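The following sketch adds custom fields to a syslog TCP input. The listen address and the `env`/`datacenter` fields are hypothetical. With `fields_under_root: true`, these fields land at the top level of each event instead of under `fields`, and they overwrite any Filebeat field with the same name.

```yaml
filebeat.inputs:
  - type: syslog
    protocol.tcp:
      host: "0.0.0.0:5514"        # hypothetical listen address
      line_delimiter: "\n"        # characters used to split incoming events
    fields:
      env: lab                    # hypothetical custom field
      datacenter: dc1             # hypothetical custom field
    fields_under_root: true       # store env/datacenter at the top level, not under "fields"
```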

Syslog filebeat input, how to get sender IP address?

As you can see, I don't get a parsing error this time, but I don't get an `event.source.ip` either. Of course, syslog is a very muddy term.

In the Logstash syslog input, you can override `grok_pattern` to parse non-standard lines with a valid grok pattern. `facility_labels` takes a zero-indexed array with all of your facility labels in order. For `timezone`, the valid IDs are listed on the [Joda.org available time zones page](http://joda-time.sourceforge.net/timezones.html). `syslog_field` exists because codecs process the data before the rest of the data is parsed: some codecs, like CEF, put the syslog data into another field after pre-processing the data.

The log input supports its own configuration options plus the common options. To fetch all files from a predefined level of subdirectories, use a pattern such as `/var/log/*/*.log`. For example, if `close_inactive` is set to 5 minutes, the countdown for the 5 minutes starts after the harvester reads the last line of the file. `scan.order` controls whether files are scanned in ascending or descending order. For `exclude_lines`, no lines are dropped by default.

By default, Filebeat identifies files by inode and device ID, so file identity does not depend on the file name. To solve problems with inode-based identification, you can configure the `file_identity` option. With the `path` method, if an input file is renamed, Filebeat will read it again if the new path matches the settings of the input.

For the TCP protocol, `timeout` defaults to 300s, and `line_delimiter` is used to split the events in non-transparent framing. For Unix sockets, the valid `socket_type` values are `stream` and `datagram`. The `index` string can only refer to the agent name and version and the event timestamp. For example, `%{[agent.name]}-myindex-%{+yyyy.MM.dd}` might expand to `filebeat-myindex-2019.11.01`. For access to dynamic fields, use `output.elasticsearch.index` or a processor. The `clean_*` options are used to clean up the state entries in the registry file. If the pipeline is configured both in the input and in the output, the option from the input is used. After having backed off multiple times from checking the file, the wait time will never exceed `max_backoff`.
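The following sketch shows two Filebeat-side options, `timezone` and `index`. Here `timezone` takes an IANA name, unlike the Joda IDs used by Logstash. The listen address and zone are arbitrary examples. The index string is the documented example that might expand to `filebeat-myindex-2019.11.01`.

```yaml
filebeat.inputs:
  - type: syslog
    format: rfc3164
    protocol.udp:
      host: "0.0.0.0:5140"                          # hypothetical listen address
    timezone: "Europe/Paris"                        # applied to timestamps that carry no zone
    index: "%{[agent.name]}-myindex-%{+yyyy.MM.dd}" # may only use agent name/version and event time
```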

And finally, for all events which are still unparsed, we have GROKs in place.

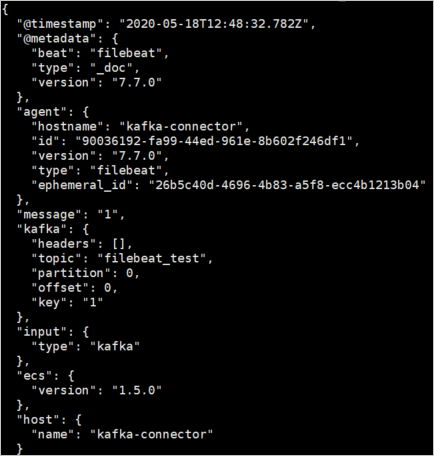

Filebeat helps you keep the simple things simple by offering a lightweight way to forward and centralize logs and files. Select your operating system (Linux or Windows).

This is the Filebeat config from my question:

```yaml
filebeat.inputs:
  - type: syslog
    protocol.tcp:
      host: "192.168.2.190:514"

filebeat.config:
  modules:
    path: ${path.config}/modules.d/*.yml
    reload.enabled: false

#filebeat.autodiscover:
#  providers:
#    - type: docker
#      hints.enabled: true

processors:
  - add_cloud_metadata: ~
  - rename:
      fields:
        - {from: "message", to: "event.original"}
  # (the remaining processors were cut off in the original post)
```

`tail_files`: if you ran Filebeat previously and the state of the file was already persisted, `tail_files` will not apply. To apply it to all files, stop Filebeat and remove the registry file. Be aware that doing this removes all previous states.

`include_lines` and `exclude_lines`: Filebeat exports only the lines that match a regular expression in `include_lines`. It drops any lines that match a regular expression in `exclude_lines`, for example lines that start with DBG. `include_lines` is executed first, even if `exclude_lines` appears before `include_lines` in the config file. For example, you can export all log lines that contain `sometext`, except for lines that begin with `DBG` (debug messages).

`fields_under_root`: to store the custom fields as top-level fields in the output document, set this option to `true`. Otherwise they are grouped under a `fields` sub-dictionary. By default, `keep_null` is set to `false`. Make sure a file is not defined more than once across all inputs, because this can lead to unexpected behavior.

If you specify a value for `scan.sort`, you can use `scan.order` to configure whether files are scanned in ascending or descending order. `harvester_limit` is useful if the number of files to be harvested exceeds the open file handler limit of the operating system. `backoff_factor` specifies how fast the waiting time is increased; the default is 2. `close_inactive` defaults to 5m, and its period starts when the last log line was read by the harvester. If a file is still being updated after its harvester was closed, Filebeat will start a new harvester again per the defined `scan_frequency`. `clean_inactive` is also useful to prevent Filebeat problems resulting from inode reuse on Linux. With `clean_removed`, files that were renamed after the harvester was finished are also removed from the registry. Another side effect of `close_timeout` is that multiline events might not be completely sent before the timeout expires. For `encoding`, see the encoding names recommended by the W3C for use in HTML5.

To configure the Beats input in Logstash, open the config file and copy the content below into it:

```shell
sudo vim /etc/logstash/conf.d/02-beats-input.conf
```

```
input {
  beats {
    port => 5044
    ssl => true
    ssl_certificate => "/etc/pki/tls/certs/logstash-forwarder.crt"
    ssl_key => "/etc/pki/tls/private/logstash-forwarder.key"
  }
}
```

Syslog input: `format` is the syslog variant to use, `rfc3164` or `rfc5424`. To automatically detect the format from the log entries, set this option to `auto`. For UDP, `host` is the host and UDP port to listen on for event streams. Tags set on an input will be appended to the list of tags specified in the general configuration. The syslog processor parses RFC 3164 and/or RFC 5424 formatted syslog messages that are stored under the `field` key. The `json.*` options make it possible for Filebeat to decode logs structured as JSON messages.

In Logstash, a type set at the shipper stays with that event for its life, even when sent to another Logstash server. For the Logstash syslog input's `timezone`, America/Los_Angeles or Europe/Paris are valid IDs; if it is not specified, the platform default will be used.

The pipeline ID can also be configured in the Elasticsearch output, but setting it on the input usually results in simpler configuration files. Using the Cisco parsers mentioned also eliminates a lot.
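The following sketch shows the line-filtering rule for a log input with a hypothetical path. Because `include_lines` always runs before `exclude_lines`, this keeps every line containing `sometext` except those that begin with `DBG`.

```yaml
filebeat.inputs:
  - type: log
    paths:
      - /var/log/*/*.log            # .log files one directory level below /var/log
    # include_lines is applied first, then exclude_lines, regardless of their order here.
    include_lines: ['sometext']
    exclude_lines: ['^DBG']
```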

When you use `close_timeout` for logs that contain multiline events, the harvester might stop in the middle of a multiline event. In that case, only parts of the event will be sent. If you disable `close_removed`, you must also disable `clean_removed`. When `close_renamed` is enabled, Filebeat closes the file handler when a file is renamed.

Each filestream input must have a unique ID to allow tracking the state of files. `paths` is a list of glob-based paths that will be crawled and fetched, and `recursive_glob.enabled` enables expanding `**` into recursive glob patterns. `tail_files` applies only to files that Filebeat has not already processed. You must set `ignore_older` to be greater than `close_inactive`. Set `max_backoff` to be greater than or equal to `backoff` and less than or equal to `scan_frequency` (`backoff <= max_backoff <= scan_frequency`). You can use time strings like 2h (2 hours) and 5m (5 minutes). With `clean_inactive`, a state can only be removed if the file is already ignored by Filebeat (the file is older than `ignore_older`).

Syslog input: `timezone` is an IANA time zone name (e.g. `America/New_York`) or a fixed time offset (e.g. `+0200`). It is used when parsing syslog timestamps that do not contain a time zone. For RFC 5424-formatted logs, if the structured data cannot be parsed according to RFC standards, the original structured data text will be prepended to the message.

Common options: fields can be scalar values, arrays, dictionaries, or any nested combination of these. If the custom field names conflict with other field names added by Filebeat, then the custom fields overwrite the other fields.

Logstash syslog input: you can add any number of arbitrary `tags` to your event. `proxy_protocol` enables proxy protocol support; only v1 is supported at this time. Use `syslog_field` in conjunction with the `grok_pattern` configuration to allow the syslog input plugin to fully parse the syslog data when a codec has moved it into another field.

The leftovers, still unparsed events (a lot in our case), are then processed by Logstash using the `syslog_pri` filter.
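The following sketch shows log input timing settings that respect the ordering constraints on these options: `ignore_older` > `close_inactive`, `clean_inactive` > `ignore_older + scan_frequency`, and `backoff <= max_backoff <= scan_frequency`. The values are examples only, not tuning advice.

```yaml
filebeat.inputs:
  - type: log
    paths:
      - /var/log/*/*.log
    close_inactive: 5m        # countdown starts after the last line is read
    ignore_older: 24h         # must be greater than close_inactive
    scan_frequency: 10s
    clean_inactive: 25h       # must be greater than ignore_older + scan_frequency (24h10s)
    backoff: 1s               # backoff <= max_backoff <= scan_frequency
    max_backoff: 10s
    backoff_factor: 2         # how fast the wait time grows between checks
```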

Filebeat modules provide the fastest getting started experience for common log formats.

In the Logstash syslog input, `timezone` is useful in case the time zone cannot be extracted from the value and is not the platform default.

The RFC 3164 format accepts more than one form of timestamp. Note: the local timestamp (for example, `Jan 23 14:09:01`) that accompanies an RFC 3164 message lacks year and time zone information. The time zone is enriched using the `timezone` configuration option. The year is enriched using the Filebeat system's local time (accounting for time zones). Because of this, it is possible for messages to appear in the future.

Selecting `path` for `file_identity` instructs Filebeat to identify files based on their paths. `max_connections` is the maximum number of connections to accept at any given point in time. Before a file can be ignored by Filebeat, the file must be closed.

Elasticsearch is a RESTful search engine that stores all of the collected data.
`format` (syslog processor, optional): the syslog format to use, `rfc3164` or `rfc5424`. To automatically detect the format from the log entries, set it to `auto`.

For `close_inactive`, the timestamp for closing a file does not depend on the modification time of the file. Instead, Filebeat uses an internal timestamp that reflects when the file was last harvested.
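The syslog processor can parse a syslog line that arrives in an ordinary field, for example from a plain TCP or log input. The following minimal sketch assumes the raw line is in `message`. The processor is in technical preview, so confirm the option names against the reference for your version.

```yaml
processors:
  - syslog:
      field: message                 # field holding the raw syslog line
      format: auto                   # detect rfc3164 vs rfc5424 per event
      timezone: "America/New_York"   # applied when the timestamp has no zone
```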

If you can get the log format changed, you will have better tools at your disposal within Kibana to make use of the data. Our SIEM is based on Elastic, and we had tried several approaches which you are also describing. Two possible pipelines are Network Device > Logstash > Filebeat > Elastic, or Network Device > Filebeat > Logstash > Elastic. Syslog-ng can also forward events to Elastic.

A minimal UDP syslog input looks like this:

```yaml
filebeat.inputs:
  # Configure Filebeat to receive syslog traffic
  - type: syslog
    enabled: true
    protocol.udp:
      host: "10.101.101.10:5140" # IP:Port of host receiving syslog traffic
```

Since the syslog input is already properly parsing the syslog lines, we don't need to grok anything, so we can leverage the aggregate filter immediately. About the fname/filePath parsing issue: I'm afraid parser.go is quite a piece for me, sorry I can't help more.

Filebeat starts a harvester for each file that it finds under the specified paths. All patterns supported by Go Glob are also supported in `paths`. You can apply additional configuration settings (such as `fields`, `include_lines`, `exclude_lines`, `multiline`, and so on) to the lines harvested from these files. All bytes after `max_bytes` are discarded and not sent. The `symlinks` option allows Filebeat to harvest symlinks in addition to regular files. `backoff` defines how long Filebeat waits before checking a file again after EOF is reached. For `scan.sort`, use `modtime` to sort by file modification time, otherwise use `filename`. You can combine JSON decoding with filtering and multiline if you set the `message_key` option. When using an inode marker file, the content of this file must be unique to the device. `close_timeout` is useful when you want to spend only a predefined amount of time on the files.

If a shared drive disappears for a short period and appears again, all files will be read again from the beginning, because the states were removed from the registry file. In such cases, we recommend that you disable the `clean_removed` option. The `clean_inactive` setting must be greater than `ignore_older + scan_frequency` to make sure that no states are removed while a file is still being harvested.

Common options: if a duplicate field is declared in the general configuration, its value will be overwritten by the value declared here. By default, the fields that you specify here will be grouped under a `fields` sub-dictionary in the output document. By default, all events contain `host.name`; set `publisher_pipeline.disable_host` to `true` to disable the addition of this field to all events. `processors` is a list of processors to apply to the input data. Tags make it easy to apply conditional filtering in Logstash. If present, the `index` formatted string overrides the index for events from this input (for Elasticsearch outputs). For other outputs, it sets the `raw_index` field of the event's metadata.

Syslog input: for a Unix socket, `mode` is expected to be a file mode as an octal string. For UDP, `read_buffer` is the size of the read buffer on the UDP socket. `max_message_size` is the maximum size of the message received over UDP; the default is 10KiB.

Logstash consumes events that are received by the input plugins.
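For a local forwarder such as syslog-ng, a Unix socket avoids opening a network port. The following sketch assumes a hypothetical socket path and group. `mode` is given as an octal string, and `group` defaults to the primary group of the user Filebeat runs as.

```yaml
filebeat.inputs:
  - type: syslog
    format: rfc5424
    protocol.unix:
      path: "/var/run/filebeat-syslog.sock"   # hypothetical socket path
      socket_type: stream                     # or datagram
      group: adm                              # hypothetical group for the socket file
      mode: "0660"                            # file mode as an octal string
```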

Isn't Logstash being deprecated, though? Or no?

Example `rt` value: `rt=Jan 14 2020 06:00:16 GMT+00:00`.

The syslog input reads syslog events as specified by RFC 3164 and RFC 5424, over TCP, UDP, or a Unix stream socket. By default, this input only supports RFC 3164 syslog with some small modifications. The TCP `timeout` is the number of seconds of inactivity before a remote connection is closed. For Unix sockets, `socket_type` defaults to `stream`. With `delimiter` framing, the characters specified in `line_delimiter` are used to split the incoming events.

The following configuration options are supported by all Logstash input plugins, for example `codec`, the codec used for input data.

If `tail_files` is set to true, Filebeat starts reading new files at the end of each file instead of the beginning. When this option is used in combination with log rotation, it's possible that the first log entries in a new file might be skipped. If `ignore_older` is enabled, Filebeat ignores any files that were modified before the specified timespan. To ignore all files that have a gz extension, use `exclude_files: ['\.gz$']`. You can specify one path per line in `paths`. A pattern such as `/var/log/*/*.log` does not fetch log files from the `/var/log` folder itself. JSON decoding only works if there is one JSON object per line. The `symlinks` option can be useful if symlinks to the log files have additional metadata in the file name, and you want to process the metadata in Logstash. The `path` file identity strategy does not support renaming files.

But I normally send the logs to Logstash first to do the syslog-to-Elasticsearch field split using a grok or regex pattern. Nothing is written if I enable both protocols; I also tried with different ports.
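One way to receive the same syslog stream over both UDP and TCP on port 514 is sketched below. It assumes that each syslog input accepts a single `protocol.*` block, so two inputs are declared under one `filebeat.inputs` key. Binding port 514 typically requires root or an equivalent capability.

```yaml
filebeat.inputs:
  # One list under a single filebeat.inputs key; one input per protocol.
  - type: syslog
    format: auto
    protocol.udp:
      host: "0.0.0.0:514"
  - type: syslog
    format: auto
    protocol.tcp:
      host: "0.0.0.0:514"
```

Declaring `filebeat.inputs:` twice in the same file is a common mistake: YAML keeps only one of the two keys, so one listener silently disappears.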

Filebeat syslog input: enable both TCP + UDP on port 514

I wonder if there might be another problem, though. Other events contain the IP but not the hostname. I have my Filebeat installed in Docker. The `rt` handling seems OK considering this documentation: "The time at which the event related to the activity was received." Really frustrating: I read the official syslog-ng blogs, watched videos, looked up personal blogs, and failed. If that doesn't work, I think I'll give writing the dissect processor a go.

The syslog input configuration includes the format, protocol-specific options, and the common options. It is also a good choice if you want to receive logs from appliances and network devices where you cannot run your own log collector. For TCP, `max_message_size` is the maximum size of the message received over TCP; the default is 20MiB.

For the most basic log input configuration, define a single input with a single path, and specify the full path to the logs. The list of inputs is a YAML array, so each input begins with a dash (`-`). Use the `enabled` option to enable and disable inputs; by default, `enabled` is set to true. You can use `harvester_limit` to indirectly set higher priorities on certain inputs by assigning a higher limit of harvesters. Currently it is not possible to recursively fetch all files in all subdirectories of a directory. The `plain` encoding is special, because it does not validate or transform any input. If two different inputs are configured, one to read the symlink and the other the original path, both paths will be harvested.

When `close_eof` is enabled, Filebeat closes a file as soon as the end of a file is reached. By default, when a file is renamed, the harvester stays open and keeps reading the file, because the file handler does not depend on the file name. Every time a file is renamed, the file state is updated and the counter for `clean_inactive` starts at 0 again. When `clean_removed` is enabled, Filebeat cleans files from the registry if they cannot be found on disk anymore under the last known name. The `clean_*` settings help to reduce the size of the registry file and can prevent a potential inode reuse issue. Furthermore, to avoid duplicates of rotated log messages, do not use the `path` method for `file_identity`. `multiline` holds the options that control how Filebeat deals with log messages that span multiple lines. For more information, see "Log rotation results in lost or duplicate events".

Logstash syslog input: some non-standard syslog formats can be read and parsed if a functional `grok_pattern` is provided. If the message cannot be parsed, the `_grokparsefailure_sysloginput` tag will be added. `timezone` specifies a time zone canonical ID to be used for date parsing. If you try to set a `type` on an event that already has one (for example, when you send an event from a shipper to an indexer), a new input will not override the existing type.

Elastic will apply best effort to fix any issues, but features in technical preview are not subject to the support SLA of official GA features.
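The following sketch shows the UDP size options for a syslog input. The listen address and buffer size are illustrative assumptions, and `max_message_size` is shown at its default. Messages larger than that limit are not accepted whole, so raise it if your devices send long events.

```yaml
filebeat.inputs:
  - type: syslog
    protocol.udp:
      host: "0.0.0.0:5140"       # hypothetical listen address
      max_message_size: 10KiB    # maximum size of a message received over UDP (default)
      read_buffer: 8MiB          # size of the read buffer on the UDP socket; example value
```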

To configure Filebeat manually (rather than using modules), specify a list of inputs in the filebeat.inputs section of filebeat.yml. The following configuration options are supported by all inputs. By default, all events contain host.name. If a duplicate field is declared in the general configuration, its value will be overwritten by the value declared here.

The close_timeout option is particularly useful when the output is blocked, because a blocked output makes Filebeat keep open file handlers even for files that were deleted from the disk. WINDOWS: If your Windows log rotation system shows errors because it can't rotate files, make sure the relevant close_* option is enabled.

The ignore_older setting relies on the modification time of the file to determine whether a file is ignored. Files whose states were removed from the registry file will be read again from the beginning. Only use the path file_identity strategy if your log files are rotated to a folder outside of the scope of your input, or not rotated at all. When using the inode_marker strategy, set the location of the marker file with file_identity.inode_marker.path.

For TCP, max_connections sets the maximum number of connections to accept at any given point in time.

Select a log Type from the list, or select Other and give it a name of your choice to specify a custom log type.

And finally, for all events that are still unparsed, we have grok patterns in place.
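Here is a sketch of a manually configured log input that combines several of these options: a full path, an inode marker file, a custom field, and disabling host.name. The /logs directory and marker path follow the example used in the Filebeat documentation. The env field is hypothetical.

```yaml
filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /logs/*.log                                         # full path to the logs
    file_identity.inode_marker.path: /logs/.filebeat-marker # marker file location
    fields:
      env: staging                    # hypothetical custom field, under "fields"
    publisher_pipeline.disable_host: true # do not add host.name to these events
```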

In Logstash, specify a locale to be used for date parsing using either an IETF-BCP47 or POSIX language tag. The type is stored as part of the event itself, so you can also use the type to search for it in Kibana.

The tags option is a list of tags that Filebeat includes in the tags field of each published event. Use the enabled option to enable and disable inputs. You can use the default values in most cases. If fields_under_root is set to true and the custom field names conflict with other field names added by Filebeat, the custom fields overwrite the other fields. The pipeline ID can also be configured in the Elasticsearch output, but setting it on the input usually results in simpler configuration files. Variable substitution in the id field only supports environment variables and values from the secret store.

If backoff_factor is set to 1, the backoff algorithm is disabled, and the backoff value is used for waiting for new lines. With the default max_backoff of 10s, it takes at most 10s to read a new line added to the log file, even after Filebeat has backed off multiple times. Regardless of where the reader is in the file, reading stops after the close_timeout period has elapsed. Only use this option if you understand that data loss is a potential side effect. The clean_inactive option helps keep the registry file small, especially if a large number of new files are generated every day. The path file_identity strategy uses path names as unique identifiers. The symlinks option can be useful if symlinks to the log files have additional metadata in the file name that you want to process in Logstash. This is, for example, the case for Kubernetes log files. The rfc6587 framing supports octet counting and non-transparent framing as described in RFC 6587.

FileBeat looks appealing due to the Cisco modules, which cover some of our network devices. I think the combined approach you mapped out makes a lot of sense. I want to try it and see whether it adapts to our environment and use-case needs, and my initial impression is that it will. And if you already have Logstash in service, this just becomes one more syslog pipeline ;). Glad I'm not the only one. The fix for that issue should be released in 7.5.2 and 7.6.0, if you want to wait a bit and try either of those.
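The backoff, close_timeout, pipeline, and fields_under_root settings can be combined in a single log input. In the sketch below, backoff, backoff_factor, and max_backoff are shown at their usual defaults. The path, the pipeline ID, and the team field are hypothetical.

```yaml
filebeat.inputs:
  - type: log
    enabled: true
    paths:
      - /var/log/app/*.log        # hypothetical path
    backoff: 1s                   # wait after EOF before checking the file again
    backoff_factor: 2             # 1 would disable the backoff algorithm
    max_backoff: 10s              # upper bound on the wait between checks
    close_timeout: 5m             # stop reading after 5 minutes (may lose data)
    pipeline: my-syslog-pipeline  # hypothetical ingest pipeline ID
    fields:
      team: network               # hypothetical custom field
    fields_under_root: true       # store "team" at the top level of the event
```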
Use this as a sample to get started with your own Logstash config. Any Logstash configuration must contain at least one input plugin and one output plugin. Create a configuration file called 02-beats-input.conf and set up the Filebeat input:

sudo vi /etc/logstash/conf.d/02-beats-input.conf

Insert the following input configuration into 02-beats-input.conf:

input {
  beats {
    port => 5044
    ssl => true
    ssl_certificate => "/etc/pki/tls/certs/logstash-forwarder.crt"
    # The key setting was cut off in the source; this path is a placeholder.
    ssl_key => "/path/to/logstash-forwarder.key"
  }
}

Codecs process the data before the rest of the data is parsed. Also see Common Options for a list of options supported by all input plugins. Setting an explicit id is particularly useful when you have two or more plugins of the same type, for example, if you have 2 syslog inputs. In the Logstash syslog input, you can override grok_pattern with a valid grok pattern that will parse the received lines. The grok pattern must provide a timestamp field. If the line is unable to be parsed, the _grokparsefailure_sysloginput tag will be added. Keep in mind that an RFC 3164 message lacks year and time zone information. For bugs or feature requests, open an issue in GitHub.

The Filebeat syslog input only supports BSD (RFC 3164) events and some variants. You can specify multiple inputs, and you can specify the same input type more than once. The index option overrides the index for events from this input (for Elasticsearch outputs), or sets the raw_index field of the event's metadata (for other outputs). See Processors for information about specifying processors in your config. If multiline settings are also specified, each multiline message is combined into a single line before the lines are filtered by include_lines. Configuring ignore_older can be especially useful if you keep log files for a long time. To apply tail_files to all files, stop Filebeat and remove the registry file. Be aware that doing this removes ALL previous states. For Unix sockets, the group option sets the group ownership of the socket created by Filebeat. This option is ignored on Windows.

I am trying to read the syslog information with Filebeat, but there is nothing in the log regarding UDP. The problem might be that you have two filebeat.inputs: sections.
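The following sketch shows multiline joining followed by include_lines filtering. Lines that begin with whitespace, such as stack-trace continuations, are appended to the previous line before include_lines is applied. The path and patterns are example values.

```yaml
filebeat.inputs:
  - type: log
    paths:
      - /var/log/app/app.log           # hypothetical path
    # Append lines that start with whitespace to the previous line.
    multiline.pattern: '^[[:space:]]'
    multiline.negate: false
    multiline.match: after
    # Applied after joining: keep only messages starting with ERR or WARN.
    include_lines: ['^ERR', '^WARN']
    ignore_older: 48h                  # must be greater than close_inactive
    tail_files: true                   # start new files at the end, not the beginning
```

Because include_lines sees the already-joined message, a continuation line never needs to match ERR or WARN by itself.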
Filebeat syslog input: enable both TCP + UDP on port 514 (Discuss the Elastic Stack, Beats; webfr, April 18, 2020): "Hello guys, I can't enable BOTH protocols on port 514 with settings below in filebeat.yml."

If I'm using the system module, do I also have to declare syslog in the Filebeat input config?

The Logstash syslog input supports RFC3164 syslog with some small modifications. The Filebeat syslog processor parses RFC 3164 and/or RFC 5424 formatted syslog messages that are stored in the field named by its field option.

By default, Filebeat identifies files based on their inodes and device IDs. The timestamp for closing a file does not depend on the modification time of the file. Instead, Filebeat uses an internal timestamp that reflects when the file was last harvested. If close_renamed is enabled and the file is renamed or moved in such a way that it's no longer matched by the file patterns specified for the input, the file will not be picked up again, and Filebeat will not finish reading it. If a file is updated after the harvester is closed, the file will be picked up again after scan_frequency has elapsed. Use harvester_limit in combination with the close_* options to make sure harvesters are stopped more often, so that new files can be picked up. The backoff value is multiplied by backoff_factor each time until max_backoff is reached.

The framing option specifies the framing used to split incoming events. The pipeline option sets the ingest pipeline ID for the events generated by this input. Tags make it easy to select specific events in Kibana or apply conditional filtering in Logstash. For the index option, the example value "%{[agent.name]}-myindex-%{+yyyy.MM.dd}" might expand to "filebeat-myindex-2019.11.01".

The leftover, still-unparsed events (a lot in our case) are then processed by Logstash using the syslog_pri filter. Without Logstash, there are ingest pipelines in Elasticsearch and processors in the Beats, but even together they are not as complete and powerful as Logstash. It would be GREAT if there were an actual, definitive guide somewhere, or if someone could give us an example of how to get the message field parsed properly. That said, Beats is great so far, and the built-in dashboards are a nice way to see what can be done!
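Here is a sketch of the setup the thread asks about, assuming the cause is the one suggested in the replies (two separate filebeat.inputs: sections). It uses one filebeat.inputs list with two syslog inputs, one per protocol, both on port 514. The bind address is an example value. UDP and TCP are separate protocols, so the same port number does not conflict.

```yaml
# A single filebeat.inputs list; declaring the key twice would drop one input.
filebeat.inputs:
  - type: syslog
    format: rfc3164
    protocol.udp:
      host: "0.0.0.0:514"
  - type: syslog
    format: rfc3164
    protocol.tcp:
      host: "0.0.0.0:514"
```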
The syslog input receives events over TCP, UDP, or a Unix stream socket. For the Unix socket, the default group is the primary group name for the user Filebeat is running as.

The close_eof option is useful when your files are only written once and not updated from time to time. The options that you specify are applied to all the files harvested by this input. The close_* settings are applied when Filebeat attempts to read from a file. If the output is blocked, a file that would otherwise be closed remains open until Filebeat once again attempts to read from the file. Setting close_inactive to a lower value means that file handles are closed sooner. To remove the state of previously harvested files from the registry file, use the clean_inactive configuration option. If you use tail_files to skip old lines on the first run, we recommend disabling it afterwards, or you risk losing lines during file rotation. The JSON decoding options can be helpful when the application logs are wrapped in JSON objects, as happens, for example, with Docker.

With the Filebeat S3 input, users can easily collect logs from AWS services and ship these logs as events into the Elasticsearch Service on Elastic Cloud, or to a cluster running the default distribution.

In Logstash, if the timestamp field is omitted, or cannot be parsed as RFC3164 style or ISO8601, a _dateparsefailure tag will be added. For the list of Elastic-supported plugins, please consult the Elastic Support Matrix.

This information helps a lot! I can get the logs into Elasticsearch from syslog-ng with no problem, but I hit the same issue: the message field arrived as one block and was not parsed. Using the Cisco parsers mentioned above also eliminates a lot of that.
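Here is a sketch of a syslog input listening on a Unix socket, which relies on the default stream socket type. The socket path and group name are hypothetical. The mode is given as an octal string, and the group setting is ignored on Windows.

```yaml
filebeat.inputs:
  - type: syslog
    format: rfc5424
    protocol.unix:
      path: "/var/run/filebeat-syslog.sock"  # hypothetical socket path
      group: adm                              # hypothetical group; ignored on Windows
      mode: "0660"                            # socket file mode as an octal string
```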